This is an extract from Discovery, the first in the BDD Books series, available here.

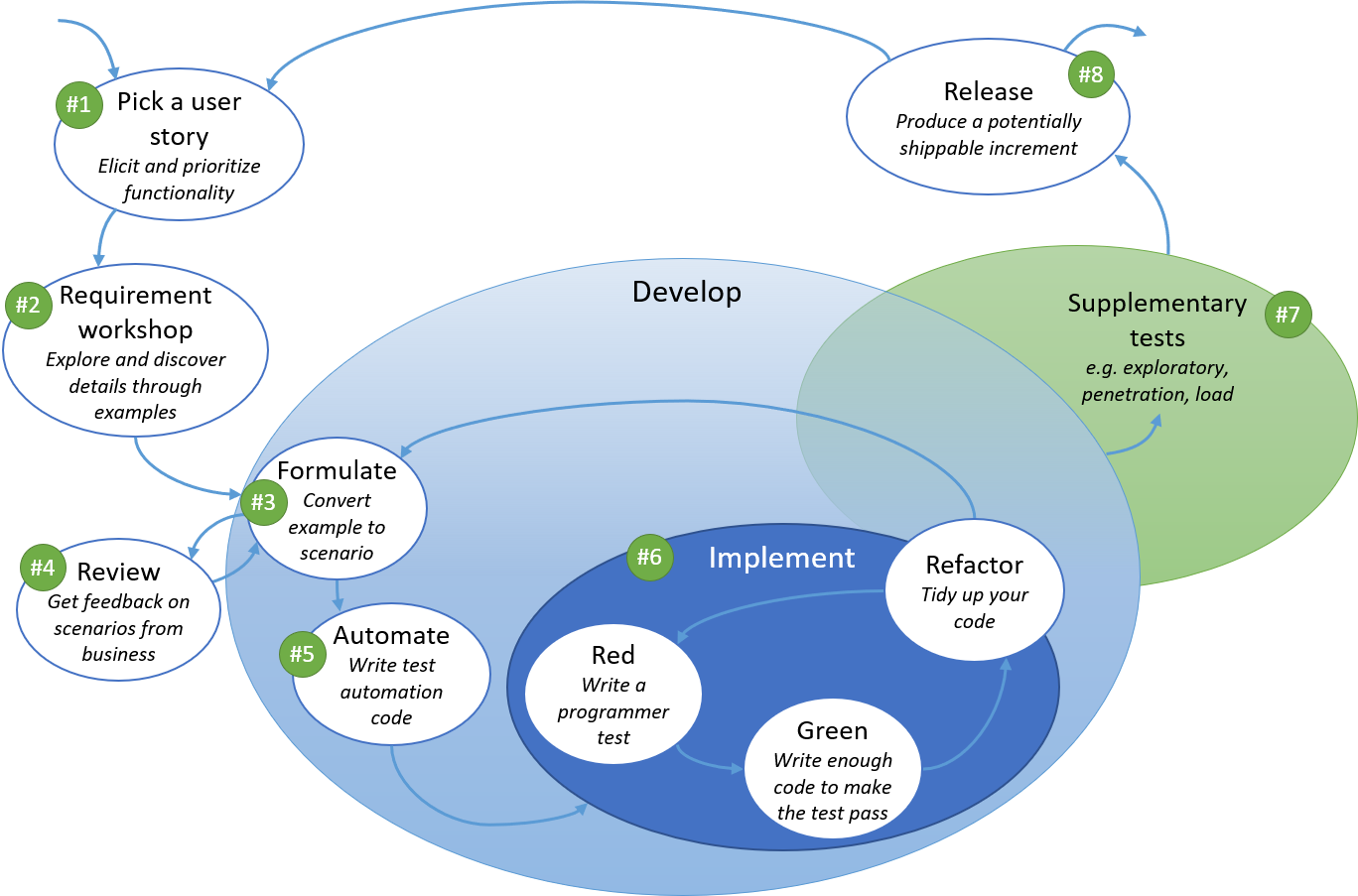

The following list contains a brief overview of the tasks shown in the diagram. For each task we describe what it involves, the ideal timing, the team members involved and the expected outcome.

#1 — Pick a user story

What: Requirements elicitation and prioritization.

When: Before the requirement workshop — preferably at least a day in advance.

Who: Product owner, business analyst, customer, key stakeholders.

Outcome: A candidate user story, scoped with relevant business rules (acceptance criteria). There should be enough detail to allow the development team to explore the scope of the story using concrete examples.

#2 — Requirement workshop

What: Discuss the user story by focusing on rules and examples.

When: Regularly throughout the project — we recommend running short workshops several times a week.

Who: All roles must be represented at this meeting (at least the three amigos), but multiple representatives of each role can attend. Each role representative has their own responsibility and focus.

Outcome: The candidate story is refined, and is frequently decomposed into smaller stories, each accompanied by a set of rules and examples. The examples should be captured in as light a format as possible, and should not be written using Given/When/Then.

#3 — Formulate

What: Formulate the examples into scenarios.

When: Before the implementation of the story starts. Best done as the first task of development, but sometimes done as a separate workshop where all scenarios scheduled for the iteration are formulated.

Who: In the beginning, when the language and style we use to phrase the scenarios is still being established, it is recommended to do it with the entire team. Later, it can be efficiently done by a pair: a developer (or someone who is responsible for the automation) and a tester (or someone who is responsible for quality) as long as their output is actively reviewed by a business representative.

Outcome: Scenarios added to source control. The language of the scenarios is business readable and consistent.
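
To make the formulation step more concrete, here is a minimal sketch in Python that turns a lightweight example, as it might be captured in a requirement workshop, into Given/When/Then text. The `Example` type, the `formulate` helper and the free-delivery rule are all hypothetical and invented for illustration. In practice the team phrases scenarios by hand; the sketch only shows the shape of the input and the result.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """A lightweight example as captured in a workshop (hypothetical shape)."""
    title: str
    context: str
    action: str
    outcome: str


def formulate(example: Example) -> str:
    """Render the example as Given/When/Then scenario text."""
    return "\n".join([
        f"Scenario: {example.title}",
        f"  Given {example.context}",
        f"  When {example.action}",
        f"  Then {example.outcome}",
    ])


# Hypothetical business rule: orders of 20 EUR or more are delivered for free.
example = Example(
    title="Order reaches the free delivery threshold",
    context="the customer has items worth 20 EUR in the basket",
    action="the customer checks out",
    outcome="the delivery fee is 0 EUR",
)

print(formulate(example))
```

The rendered text is what would be reviewed by a business representative and added to source control.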

#4 — Review

What: Review the scenarios to ensure they correctly describe expected business behaviour.

When: Whenever a scenario is written or modified.

Who: Product owner (and maybe business analyst, customer, key stakeholders).

Outcome: Confidence that the development team have correctly understood the requirements of the business; the behaviour is expressed using appropriate terminology.

#5 — Automate

What: Automate the scenarios by writing test automation code.

When: Automate scenarios before starting the implementation, following a test-driven approach. This way the implementation can be "driven" by the scenarios, so the application will be designed for testability and the development team will have greater focus on real outcomes.

Some teams do the automation task separately or even (especially when the application is only automated through the UI) after the implementation is finished. This approach reduces the advantages of BDD, but the end result might still be better than doing ad-hoc integration testing or no testing at all. You may find yourself working in this way as part of a cultural change, but try not to get stuck here.

Who: Developers or test automation experts. When doing pair programming, pairing a developer with a tester works well.

Whether scenarios should be automated by developers or testers depends heavily on the company culture and the team setup. Developers are certainly needed for some complex, design-related aspects of the test automation infrastructure. On the other hand, a good automation infrastructure may enable team members with less coding experience to perform automation tasks.

Outcome: A set of (failing) automated scenarios that can be executed both locally and in continuous integration/delivery/deployment environments.
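
As a rough illustration of what a failing automated scenario can look like, the sketch below automates one scenario in plain Python before the application code exists. The `delivery_fee` function, the free-delivery rule and the `run_scenario` helper are hypothetical and invented for this example. A real project would normally use a BDD tool such as Cucumber or SpecFlow rather than a hand-rolled runner.

```python
def delivery_fee(order_total: float) -> float:
    """Hypothetical application code; deliberately not implemented yet."""
    raise NotImplementedError("delivery_fee is not implemented yet")


def scenario_free_delivery_at_threshold() -> None:
    # Given the customer has items worth 20 EUR in the basket
    order_total = 20.0
    # When the customer checks out
    fee = delivery_fee(order_total)
    # Then the delivery fee is 0 EUR
    assert fee == 0.0, f"expected a fee of 0.0, got {fee}"


def run_scenario(scenario) -> str:
    """Run one scenario and report 'passed' or 'failed' (hand-rolled runner)."""
    try:
        scenario()
    except (AssertionError, NotImplementedError):
        return "failed"
    return "passed"


# The scenario fails because the behaviour has not been implemented yet.
print(run_scenario(scenario_free_delivery_at_threshold))
```

The failure is the intended starting point: it gives the implementation task a concrete target to work towards.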

#6 — Implement

What: Implement the application code to make the automated scenarios pass. We show this as the classic TDD cycle of Red/Green/Refactor, with programmer tests driving the implementation.

When: Implementation starts as soon as the first scenario has been automated, so the implementation is being driven by a failing scenario.

Who: Developers.

Outcome: A working application that behaves as specified. This can be verified automatically.
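
The following minimal sketch shows the "green" state of such a cycle in Python, assuming a hypothetical free-delivery rule. The names `delivery_fee`, `FREE_DELIVERY_THRESHOLD` and `STANDARD_FEE`, and the amounts used, are invented for illustration and do not come from the book.

```python
FREE_DELIVERY_THRESHOLD = 20.0  # hypothetical business rule
STANDARD_FEE = 3.0              # hypothetical fee below the threshold


def delivery_fee(order_total: float) -> float:
    """Green: the simplest implementation that satisfies the checks below."""
    if order_total >= FREE_DELIVERY_THRESHOLD:
        return 0.0
    return STANDARD_FEE


def scenario_free_delivery_at_threshold() -> None:
    # Given the customer has items worth 20 EUR in the basket
    # When the customer checks out
    # Then the delivery fee is 0 EUR
    assert delivery_fee(20.0) == 0.0


def programmer_test_fee_below_threshold() -> None:
    # A finer-grained programmer test that drives a detail of the rule.
    assert delivery_fee(19.99) == STANDARD_FEE


# Both the scenario and the programmer test pass against this implementation.
scenario_free_delivery_at_threshold()
programmer_test_fee_below_threshold()
print("all checks pass")
```

With both checks passing, the code can be refactored safely, because any regression would turn one of them red again.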

#7 — Supplementary test

What: Perform the manual and other forms of testing described in your testing strategy. This can include scripted manual testing, exploratory testing, performance testing, security testing or other kinds of testing. For more details see quadrants 3 and 4 in the Agile Testing Quadrants.

When: You don't have to wait until an entire story is finished. Scenarios provide a functional breakdown of the user story, so each scenario itself contributes a meaningful part of the application's behaviour. Therefore, as soon as there is a completed scenario, testing activities can start. (Test preparation can start even earlier, of course.)

Who: Testers — other team members can help with some aspects of testing, but these activities are usually coordinated by testers.

Outcome: High quality working application; the story is done.

#8 — Release

What: Produce a potentially shippable increment. This is the end of our cycle, but a released product should be used to gather feedback from the users, which can provide input to future development cycles — although this is out of scope for this article.

When: Whenever all tests are passing, but especially at the end of an iteration.

Who: The development team will be responsible for producing the releasable artifacts, but there may be specialized teams that create the actual release package.

Outcome: An installable release package.